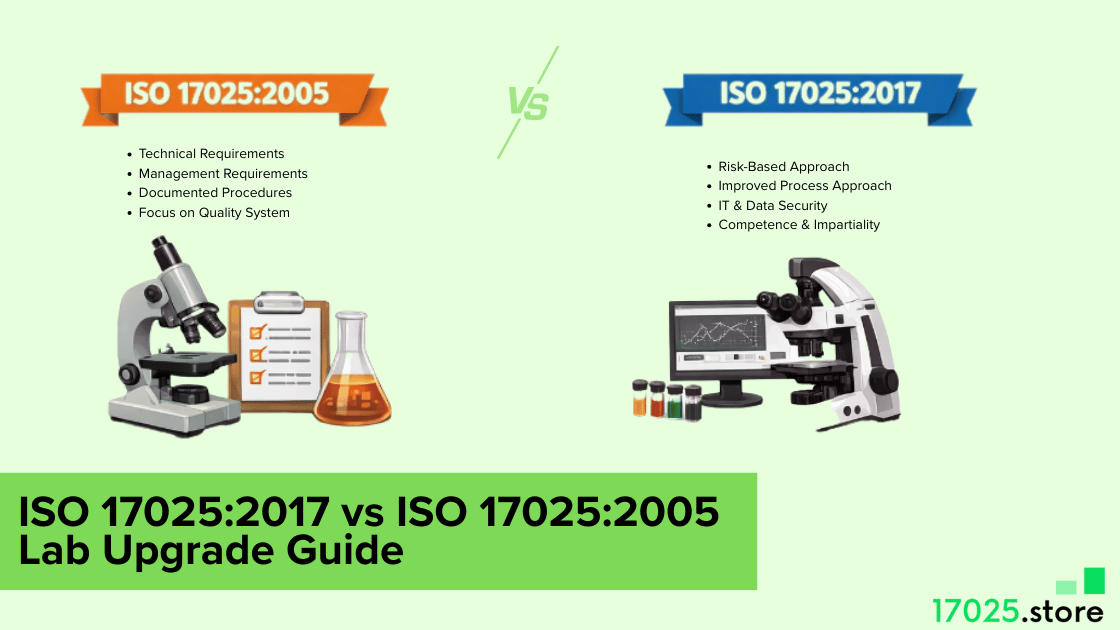

The move from ISO 17025:2005 to ISO 17025:2017 is a shift labs actually feel during audits, not a simple rewrite. ISO/IEC 17025 is the competence standard for testing and calibration labs. This guide compares the 2005 and 2017 editions in lab terms, not clause jargon. You will see what truly changed, what audit evidence now needs to look like, and how to upgrade fast without rebuilding your whole system.

2005 focused on documented procedures. 2017 focuses on governance, risk control, and defensible reporting decisions. That single shift explains why audits now feel more like tracing a job trail than checking a manual.

A lab does not “pass” ISO 17025 by having more documents. A lab passes by producing results you can defend, with evidence that is retrievable, consistent, and impartial. That is why the 2017 revision matters in practice. Instead of rewarding procedure volume, it pushes outcomes, risk control, and traceable decision logic. The clean way to win audits is to compare what auditors accepted in 2005 with what they now try to break in 2017, then build evidence that survives stress.

Quick Comparison

Both editions still demand competent people, valid methods, controlled equipment, and technically sound results. What shifts is how the standard expects you to run the system and prove control.

Think of the key changes as three moves: tighter front-end governance, stronger operational risk control, and sharper reporting discipline. Digital record reality also gets treated as a real control area rather than “admin.”

2017 vs 2005: Structure Changes

The 2005 edition split its requirements into two blocks: “Management” and “Technical.” The 2017 edition reorganizes them into an integrated flow that starts with governance and ends with results. This supports a clearer process approach, which makes audits feel like tracing a job through your system rather than checking whether a document exists.

What Changed In 2017

2017 is less interested in whether you wrote a procedure and more interested in whether your system prevents bad results under real variation.

Three shifts drive most audit outcomes. Governance comes first through impartiality and confidentiality controls. Risk-based thinking becomes embedded in how you plan and operate, instead of living as a preventive-action habit. Reporting becomes sharper when you state pass or fail, because decision logic must be defined and applied consistently.

Digital control is the silent driver behind many nonconformities. Information technology is no longer a side note because results, authorizations, calculations, and records typically live in LIMS, spreadsheets, instruments, and shared storage.
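One way to make spreadsheet changes provable is to record a content hash, a user, and a reason every time a controlled file changes. The sketch below is a minimal illustration, not a validated data integrity control. The function names, the log structure, and the file name are hypothetical, and a real lab would store the log somewhere analysts cannot edit.

```python
import hashlib
from datetime import datetime, timezone
from typing import Optional


def sha256_of(data: bytes) -> str:
    """Return the SHA-256 hex digest of a file's contents."""
    return hashlib.sha256(data).hexdigest()


def record_change(log: list, name: str, data: bytes, user: str, reason: str) -> Optional[dict]:
    """Append a log entry only if the content differs from the last recorded version."""
    digest = sha256_of(data)
    # Find the most recent entry for this file, if any.
    previous = next((e for e in reversed(log) if e["name"] == name), None)
    if previous is not None and previous["sha256"] == digest:
        return None  # content unchanged, nothing to record
    entry = {
        "name": name,
        "sha256": digest,
        "user": user,
        "reason": reason,
        "recorded_at": datetime.now(timezone.utc).isoformat(),
    }
    log.append(entry)
    return entry


# Hypothetical example: three saves, one of which changes nothing.
log = []
record_change(log, "uncertainty_budget.xlsx", b"version 1", "analyst_a", "initial release")
record_change(log, "uncertainty_budget.xlsx", b"version 1", "analyst_a", "re-saved, no edit")
record_change(log, "uncertainty_budget.xlsx", b"version 2", "analyst_b", "corrected formula")
print(len(log))  # 2
```

With a log like this, the question "what changed and why" has a retrievable answer: each entry ties a specific file state to a person and a stated reason.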

Minimum Upgrade Set: If you only strengthen one layer, strengthen the traceability of evidence. Make every reported result trace back to a controlled method version, authorized personnel, verified equipment status, and a reviewed record trail you can retrieve in minutes.

What Did Not Change

Core competence still wins. You still need technically valid methods, competent staff, calibrated and fit-for-purpose equipment, controlled environmental conditions where relevant, and results that can be traced and defended. The difference is that 2017 expects those controls to be provable through clean job trails and consistent decision-making, not just described in procedures.

Audit-Driving Differences

Most gaps show up when an auditor picks a completed report and walks backward through evidence. That single trail exposes what your system actually controls.

The fastest way to close real gaps is to design evidence around the failure modes auditors repeatedly uncover.

- Impartiality is tested like a technical control, not a policy statement. Failure mode: a conflict exists, but no record shows it was assessed.

- Risk-based thinking must appear where results can degrade, like contract review, method change, equipment downtime, and data handling. Failure mode: risk is logged generically, while operational risks stay unmanaged.

- Option A or Option B must be declared and mapped so responsibilities do not split or vanish between systems. Failure mode: staff say “ISO 9001 handles it,” but no mapped control exists.

- Information technology integrity must be demonstrable across tools, including access, edits, backups, and review discipline. Failure mode: a spreadsheet changed, but no one can prove what changed and why.

- Decision rule use must be consistent when you claim conformity, especially where uncertainty influences pass or fail. Failure mode: the same measured value passes one week and fails the next because the rule was applied differently.
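The decision rule point is easier to audit when the rule exists as one function that every report passes through. The sketch below assumes a guarded acceptance rule in which the guard band equals the expanded uncertainty U. That choice is an assumption for illustration only. The standard does not prescribe a particular rule, and the function name, limits, and values are hypothetical.

```python
def conformity(measured: float, lower: float, upper: float, expanded_u: float) -> str:
    """Apply a guarded decision rule with guard band equal to expanded uncertainty U.

    pass:          value lies inside the limits by at least U
    fail:          value lies outside the limits by more than U
    inconclusive:  value is within U of a limit on either side
    """
    if expanded_u < 0:
        raise ValueError("expanded uncertainty must be non-negative")
    if lower + expanded_u <= measured <= upper - expanded_u:
        return "pass"
    if measured < lower - expanded_u or measured > upper + expanded_u:
        return "fail"
    return "inconclusive"


# Hypothetical specification: 9.0 to 11.0, with U = 0.2.
print(conformity(10.0, 9.0, 11.0, 0.2))  # pass
print(conformity(10.9, 9.0, 11.0, 0.2))  # inconclusive
print(conformity(11.5, 9.0, 11.0, 0.2))  # fail
```

Because the same inputs always produce the same statement, a reviewer can re-run any reported result and confirm that the conformity claim matches the defined rule.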

ISO 17025:2017 vs ISO 17025:2005 Audit Impact Mini-Matrix

| Area | 2005 Typical Pattern | 2017 Audit Focus | Evidence That Closes It |
| --- | --- | --- | --- |
| Governance | Policies existed | Impartiality managed as a live risk | Impartiality risk log + periodic review record |
| Risk Control | Preventive action mindset | Risk-based thinking embedded in operations | Risk entries tied to contract, method, data, equipment |
| Management System | Manual-driven compliance | Option A vs Option B clarity | Declared model + responsibility mapping |
| Data Systems | Forms and files | Information technology integrity | Access control + change history + backup proof |
| Reporting | Results issued | Decision rule consistency | Defined rule + review check + example application |

Micro-Examples

A testing lab updates a method revision after a standard change. Under audit, the pressure point is not “did you update the SOP?” The pressure point is whether analysts were re-authorized for the new revision, whether worksheets and calculations match the revision, and whether report review confirms the correct method version was used. Failure mode: method changed, but authorization stayed old.
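The method revision example can be checked mechanically if authorizations are recorded per method revision rather than per method. The sketch below is a minimal illustration under that assumption. The `Authorization` record, the job fields, and the identifiers such as `TM-01` are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Authorization:
    """One analyst authorized for one specific revision of one method."""
    analyst: str
    method: str
    revision: str


def unauthorized_jobs(jobs: list, authorizations: set) -> list:
    """Return job IDs where the analyst lacks authorization for the exact revision used."""
    return [
        job["job_id"]
        for job in jobs
        if Authorization(job["analyst"], job["method"], job["revision"]) not in authorizations
    ]


# Hypothetical records: analyst_a was never re-authorized for Rev 4.
authorizations = {
    Authorization("analyst_a", "TM-01", "Rev 3"),
    Authorization("analyst_b", "TM-01", "Rev 4"),
}
jobs = [
    {"job_id": "J-101", "analyst": "analyst_a", "method": "TM-01", "revision": "Rev 3"},
    {"job_id": "J-102", "analyst": "analyst_a", "method": "TM-01", "revision": "Rev 4"},
    {"job_id": "J-103", "analyst": "analyst_b", "method": "TM-01", "revision": "Rev 4"},
]
print(unauthorized_jobs(jobs, authorizations))  # ['J-102']
```

Matching on the full analyst, method, and revision combination is the design choice that matters here: a method-level authorization would have let job J-102 through unnoticed.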

A calibration lab finds an overdue reference standard after a calibration was issued. Under audit, the expectation is an impact review: which jobs used the standard, whether results remain valid, whether re-issue or notification is required, and how recurrence is prevented through system control. Failure mode: the standard was overdue, but no traceable impact logic exists.
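The first step of that impact review, finding which jobs used the standard while it was overdue, can be sketched as a simple filter. The example below assumes each job record lists the reference standards used and the date the work was performed. The field names, the identifiers, and the treatment of the boundary dates are assumptions a lab would need to set for itself.

```python
from datetime import date


def affected_jobs(jobs: list, standard_id: str, due: date, restored: date) -> list:
    """Return job IDs that used the standard while its calibration was overdue.

    Assumption: the standard counts as overdue strictly after its due date
    and strictly before the date its calibration status was restored.
    """
    return [
        job["job_id"]
        for job in jobs
        if standard_id in job["standards"] and due < job["performed_on"] < restored
    ]


# Hypothetical job records for the review.
jobs = [
    {"job_id": "C-201", "performed_on": date(2024, 2, 27), "standards": ["REF-007"]},
    {"job_id": "C-202", "performed_on": date(2024, 3, 5), "standards": ["REF-007"]},
    {"job_id": "C-203", "performed_on": date(2024, 3, 10), "standards": ["REF-009"]},
    {"job_id": "C-204", "performed_on": date(2024, 3, 18), "standards": ["REF-007", "REF-009"]},
]
print(affected_jobs(jobs, "REF-007", date(2024, 3, 1), date(2024, 3, 20)))  # ['C-202', 'C-204']
```

The resulting list is only the starting point. Each affected job still needs a recorded judgment on whether its results remain valid and whether re-issue or customer notification is required.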

Evidence Pack Test

A fast way to compare your system against 2017 expectations is to run one repeatable test.

Pick one recently released report and trace the full evidence chain: request review, method selection, competence authorization, equipment status, environmental controls where relevant, calculations, technical review, and release. Then check whether impartiality and confidentiality were actually considered for that job and whether evidence is retrievable without “asking around.”
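The trace described above can be run as a completeness check against a fixed list of evidence items. The sketch below assumes each report has a job trail that maps evidence items to record references. The item names, the reference format, and the choice to leave environmental controls out of the mandatory list are all illustrative assumptions.

```python
# Evidence items expected for every report in this sketch.
# Environmental controls are omitted because they apply only where relevant.
REQUIRED_EVIDENCE = (
    "request_review",
    "method_selection",
    "competence_authorization",
    "equipment_status",
    "calculations",
    "technical_review",
    "release",
    "impartiality_check",
    "confidentiality_check",
)


def missing_evidence(trail: dict) -> list:
    """Return required evidence items with no record reference in the trail."""
    return [item for item in REQUIRED_EVIDENCE if not trail.get(item, "").strip()]


# Hypothetical trail: no technical review record, blank impartiality reference.
trail = {
    "request_review": "CR-0412",
    "method_selection": "TM-01 Rev 4",
    "competence_authorization": "AUTH-0088",
    "equipment_status": "EQ-031 in calibration",
    "calculations": "WS-0412",
    "release": "RPT-0412",
    "impartiality_check": "  ",
    "confidentiality_check": "CONF-0412",
}
print(missing_evidence(trail))  # ['technical_review', 'impartiality_check']
```

An empty result means every item has a reference on file. It does not confirm that the referenced records are correct, so the technical judgment in the trace still belongs to a reviewer.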

Use a measurable benchmark to keep this honest: if a report trail takes more than 3 minutes to retrieve, your system is not audit-ready. That is not a paperwork problem. It is a control design problem.

30-Day Upgrade Path

Speed comes from narrowing the scope. Upgrade what changes audit outcomes, then expand only if you need to.

- Start with a small sample of recent reports across your highest-risk work, covering at least one case per method family.

- Standardize job trail storage so the report links cleanly to method version, authorization, equipment status, and review evidence.

- Embed risk-based thinking into contract review, method change, equipment failures, and data integrity controls.

- Harden information technology control where results are created or stored, including access, edits, backups, and spreadsheet review.

- Lock reporting discipline with a defined decision rule approach, then prove consistency through review records and examples.

After that month, any sampled report should be traceable in minutes, not hours. Once that capability exists, audits become predictable because your evidence behaves like a system.

FAQ

Is ISO 17025:2005 still used for accreditation?

No. The 2005 edition has been withdrawn, and accreditation bodies now assess labs against the 2017 edition. A lab operating on 2005-era habits will still be judged by 2017-style evidence and governance control.

What is the biggest difference between the editions?

Governance and effectiveness carry more weight, while document volume carries less weight. Results must be defensible through traceable job trails and consistent decision logic.

Do testing and calibration labs experience the changes differently?

System expectations stay the same, but calibration labs often feel more pressure on equipment status discipline, traceability chains, uncertainty use, and conformity statements.

Where do labs usually fail first in 2017 audits?

Common failures cluster around method version control, authorization by scope, data integrity in spreadsheets or LIMS, and inconsistent reporting decisions.

How should a small lab start without overbuilding?

Trace one report end-to-end, fix the evidence chain, then repeat with a small sample until retrieval and decision consistency are stable.

Conclusion

Treat ISO 17025:2017 vs ISO 17025:2005 as a shift in how you prove control, not a reason to generate more paperwork. Build job trails that survive report-trace audits, manage governance and risk where results can degrade, and lock reporting discipline so claims stay consistent under scrutiny. When evidence retrieval becomes fast and repeatable, the system becomes audit-ready by design rather than by effort.