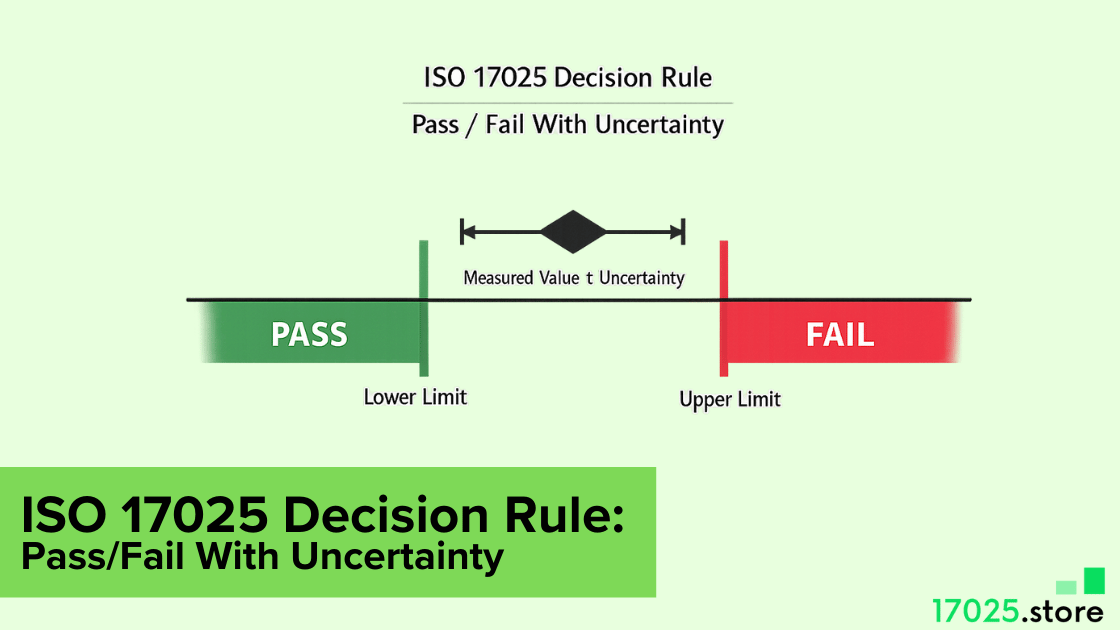

A decision rule decides how you declare pass or fail when uncertainty exists. This page explains how to choose an ISO/IEC 17025 decision rule, agree on it during contract review, and report it cleanly. You also get clause-linked tables you can reuse in your procedure and on certificates.

Labs do not lose audits because uncertainty exists. They lose audits because the rule is unclear, the customer did not agree, or the report language cannot be defended. In practice, you need one rule that fits the job, then a repeatable way to apply it every time you issue a conformity call.

Decision Rules In ISO/IEC 17025

Definition: A decision rule describes how your lab accounts for measurement uncertainty when it converts a measured value into a compliance decision against a stated limit.

Application: Start by fixing three inputs during contract review. You need the specification limit, the uncertainty you will report at that point, and the style of conformity call you will issue. When the product standard already defines the rule, the lab uses that rule and records it as the agreed basis.

Where teams go wrong is mixing rules. They declare one line item a pass based on the measured value alone, then apply a safety margin to another line item on the same job. That inconsistency is the first thing customers and auditors challenge.
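One way to catch rule mixing before a report goes out is to tag every line item with the ID of the rule agreed at contract review and refuse to proceed if a job carries more than one. The sketch below assumes that tagging exists. The `LineItem` type, the `rule_id` field, and the `assert_single_rule` helper are hypothetical names, not part of the standard or of any particular LIMS.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class LineItem:
    """One reported result on a job (hypothetical structure)."""
    item_id: str
    rule_id: str  # ID of the decision rule agreed at contract review (assumed field)


def assert_single_rule(items: list[LineItem]) -> str:
    """Return the job's single rule ID, or raise if line items mix rules or have none."""
    rule_ids = {item.rule_id for item in items}
    if len(rule_ids) != 1:
        raise ValueError(f"Mixed or missing decision rules: {sorted(rule_ids)}")
    return rule_ids.pop()


job = [LineItem("P1", "GUARDED-U"), LineItem("P2", "GUARDED-U")]
print(assert_single_rule(job))  # GUARDED-U
```

An empty job also raises, because a job with no agreed rule cannot support a conformity call either.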

Clause Table

| Clause | Purpose | Lab Document | Entry To Include | Record Location |
|---|---|---|---|---|
| 7.1.3 | Agreement on the decision basis before work starts | Contract Review Procedure / Quote Template | Decision method, uncertainty basis used for the call, and boundary handling for borderline results | Quote file, contract review record, or job order notes |
| 7.8.6 | Reporting conformity calls with a clear scope | Report Template / Reporting Procedure | Conformity claim, the requirement used, the results the claim covers, and the decision rule applied (unless inherent in the requirement) | Report body plus controlled template revision history |

Statement Of Conformity

Definition: A statement of conformity is the plain-language claim on a report or certificate that an item meets, or does not meet, a stated requirement.

Application: Decide which reporting style you will use, and keep it consistent across the job and across time.

Option A is a direct (simple) acceptance rule. You compare the result to the tolerance limit and declare pass or fail. It is fast, but borderline results carry a higher decision risk.

Option B is a guarded acceptance rule. You shrink the acceptance zone by a safety margin, so “pass” is only issued when the result is clearly inside the limit after uncertainty is considered. It reduces false accept risk, but it can increase false rejects near the limit.
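The two options only disagree near the limit. The sketch below assumes an upper tolerance limit and takes Option B's safety margin to be the expanded uncertainty U itself, which is one common choice rather than the only one. The function names and the numbers are illustrative.

```python
def simple_acceptance(x: float, tl: float) -> bool:
    """Option A: pass if the measured value itself is within the upper limit."""
    return x <= tl


def guarded_acceptance(x: float, u: float, tl: float) -> bool:
    """Option B: pass only if the value plus expanded uncertainty is within the limit.

    Equivalent to shrinking the acceptance zone by a margin equal to U (assumed choice).
    """
    return x + u <= tl


# Illustrative borderline result: upper limit 10.00, reading 9.97, U = 0.05
tl, x, u = 10.00, 9.97, 0.05
print(simple_acceptance(x, tl))      # True: Option A reports a pass
print(guarded_acceptance(x, u, tl))  # False: Option B withholds the pass
```

A reading of 9.90 with the same U passes under both options, which is why the choice matters mainly for results close to the limit.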

Certificate-Ready Lines

- “Conformity is evaluated against [specification] using the agreed decision rule; the claim applies to results listed in [table or section].”

- “For this job, pass is reported only when the result, including expanded uncertainty, remains within the acceptance limit.”

- “Results in the boundary zone are reported as inconclusive and are not declared compliant or noncompliant.”

Guard Band

Definition: A guard band is the safety margin between the tolerance limit and the acceptance limit that your lab actually uses for the decision.

Application: Treat it as an engineering knob you set, not a sentence you copy. If you want conservative decisions, increase the margin. If the customer accepts more risk, reduce it.

For an upper tolerance limit, derive the acceptance limit (AL) from the tolerance limit (TL) and a chosen margin g:

AL = TL − g
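As a worked example with illustrative numbers (not taken from any standard), take an upper tolerance limit of 10.00 and a chosen margin of 0.02, both in the same unit:

```latex
\begin{aligned}
AL &= TL - g \\
   &= 10.00 - 0.02 \\
   &= 9.98
\end{aligned}
```

Widening g to 0.05 would move AL down to 9.95, which is the "more conservative" direction of the knob.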

Then use the measured value x and expanded uncertainty U.

| Case | Rule Using x and U | Decision | Risk Control |
|---|---|---|---|
| Clear Pass | x + U ≤ AL | Pass | Controls false accept risk |
| Clear Fail | x − U > TL | Fail | Controls false reject risk |
| Boundary Zone | Otherwise | Inconclusive | Forces documented handling of borderline results |
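The three cases above can be sketched in a few lines of Python for an upper limit. The `classify_upper` name and the example values are hypothetical; x, U, TL, and g are the symbols defined in this section, and all values are assumed to share one unit.

```python
from enum import Enum


class Decision(Enum):
    PASS = "pass"
    FAIL = "fail"
    INCONCLUSIVE = "inconclusive"


def classify_upper(x: float, u: float, tl: float, g: float) -> Decision:
    """Apply the guard-banded rule for an upper tolerance limit.

    x: measured value, u: expanded uncertainty U,
    tl: tolerance limit TL, g: guard band (margin), g >= 0.
    """
    if u < 0 or g < 0:
        raise ValueError("U and g must be non-negative")
    al = tl - g                   # acceptance limit AL = TL - g
    if x + u <= al:               # whole interval inside the acceptance limit
        return Decision.PASS
    if x - u > tl:                # whole interval beyond the tolerance limit
        return Decision.FAIL
    return Decision.INCONCLUSIVE  # boundary zone: handling must be documented


# Illustrative values: TL = 10.00, g = 0.02, U = 0.05 (same unit throughout)
# Expected decisions: 9.90 -> pass, 9.96 -> inconclusive, 10.10 -> fail
for x in (9.90, 9.96, 10.10):
    print(x, classify_upper(x, 0.05, 10.00, 0.02).value)
```

Setting g = 0 reduces the pass condition to x + U ≤ TL, so the same function covers the case where uncertainty alone provides the margin.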

Pass/Fail Table

Use this table to keep the decision rule consistent across quote, execution, and reporting.

| Process Point | Inputs Set | Records Kept | Decision Output | Report Text |
|---|---|---|---|---|
| Contract Review | Limit, uncertainty basis, decision style | Spec revision, agreed rule, boundary handling | Rule agreed, or job declined | The decision basis is recorded in the job acceptance |
| Test Or Calibration | Data quality and uncertainty evaluation method | Result x, expanded uncertainty U, limit TL, acceptance limit AL | Pass, fail, or inconclusive | Decision for each result line item |
| Report Release | Scope of claim and coverage of results | Item IDs, units, and points included in the claim | The same logic applies to all points | One consistent claim line plus scope |
| Complaint Or Appeal | Boundary-zone handling | Review notes, allowed recheck actions, and approvals | Confirm, revise, or withdraw | Traceable change record |
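At report release, one way to keep the claim consistent is to generate every per-point decision and the single claim line from the same agreed rule. The sketch below restates the three-zone upper-limit logic from the guard band section in compact form and borrows the wording of the first certificate-ready line. The `ReportPoint` type, the `decide` and `build_report` helpers, and the `SPEC-EXAMPLE` label are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ReportPoint:
    """One measured point covered by the conformity claim (hypothetical)."""
    point_id: str
    x: float  # measured value
    u: float  # expanded uncertainty U


def decide(x: float, u: float, tl: float, g: float) -> str:
    """Three-zone rule for an upper limit: pass, fail, or inconclusive."""
    if x + u <= tl - g:
        return "pass"
    if x - u > tl:
        return "fail"
    return "inconclusive"


def build_report(points: list[ReportPoint], spec: str, unit: str,
                 tl: float, g: float) -> list[str]:
    """Apply one rule to every point, then emit one claim line with its scope."""
    lines = [
        f"{p.point_id}: x={p.x} {unit}, U={p.u} {unit}, {decide(p.x, p.u, tl, g)}"
        for p in points
    ]
    scope = ", ".join(p.point_id for p in points)
    lines.append(
        f"Conformity is evaluated against {spec} using the agreed decision rule; "
        f"the claim applies to results listed for points {scope}."
    )
    return lines


points = [ReportPoint("P1", 9.90, 0.05), ReportPoint("P2", 9.96, 0.05)]
for line in build_report(points, "SPEC-EXAMPLE", "mm", tl=10.00, g=0.02):
    print(line)
```

Because the claim line and the point decisions come from one call with one set of TL and g values, a report cannot quietly apply different logic to different points.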

When you implement your ISO/IEC 17025 decision rule this way, you are not just compliant. You are predictable, and predictability is what customers actually pay for.